I compiled my past tweets about Tesla into a memo.

Tesla Memo [1/3] : Tesla Autonomy Investor Day, Apr 2019 - Sep 2020

Tesla Memo [2/3] : before Tesla AI Day, Aug 19, 2021

Overview

- Tesla AI Day, Aug 19, 2021

- Tesla CFP8: 8-bit Configurable Floating-Point

- Hybrid 8-bit Floating Point (HFP8) Training and Inference for DNNs, IBM

- 2x Dojo D1 Chip Module?

- "Comparative Study on Power Module Architectures for Modularity and Scalability", Review, Dec 2020

- "Scaled compute fabric for accelerated deep learning", Cerebras, Patent Application, Mar 5, 2020 (Aug 28, 2018)

- Closing remarks

- Bonus

- AMD Exascale APU, Chiplets

- Note: I don't know how to set up in-page links, so please scroll.

Tesla AI Day, Aug 19, 2021

Note: From the Dojo introduction onward, the presenter is Ganesh Venkataramanan. He is a co-inventor of this patent, covered in the previous entry:

"Packaged device having embedded array of components", US20200221568A1

Tesla

Sr Director Autopilot Hardware at Tesla

Dec 2018 – Present

Director Autopilot Hardware

Mar 2016 – Dec 2018

AMD

Senior Director Design Engineering

Jul 2014 – Mar 2016

...

Senior Design Engineer / Design Engineer

Jun 2001 – Jun 2004

...

High Performance Training Node

64 bit Superscalar CPU

Vector Dispatch with 4x (8x8 Matrix Multiplication) & SIMD

1024 GFLOPS: BFP16, CFP8 (8 bit Configurable Floating Point)

64 GFLOPS: FP32

Int32, Int16, Int8

128 MB ECC SRAM

Low-Latency High Bandwidth 2-D Network Switch (1 Cycle Hop)

512 GB/s in Each Cardinal Direction

D1 Chip

354 Training Nodes

362 TFLOPS: BFP16, CFP8

22.6 TFLOPS: FP32

10 TBps/dir: On-Chip Bandwidth

4 TBps/edge: Off-Chip Bandwidth

400 W TDP

500,000 nodes

Dojo Interface Processor

PCIe Gen4 for Host

Training Tile

High Bandwidth DRAM Shared Memory for the Compute Plane

Higher Radix Network Connection

25x Known Good D1 Die onto Fan-Out Wafer

9 PFLOPS: BFP16, CFP8

9 TBps/edge: Off-Tile Bandwidth

Vertical Directory Connect Voltage-Regulator Module (VRM)

52 V DC

<1 cu Ft
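As a rough cross-check of the slide figures above, the chip and tile peaks follow from multiplying the per-node numbers. This is a minimal Python sketch. The constant and function names are my own, and it assumes peak throughput simply scales linearly with the node count and the die count.

```python
# Figures quoted from the AI Day slides transcribed above.
NODE_GFLOPS_BFP16_CFP8 = 1024  # per training node
NODE_GFLOPS_FP32 = 64          # per training node
NODES_PER_D1 = 354             # training nodes per D1 chip
D1_PER_TILE = 25               # D1 dies per training tile


def chip_tflops(node_gflops: float, nodes: int = NODES_PER_D1) -> float:
    """Peak TFLOPS of one chip, assuming linear scaling over nodes."""
    return node_gflops * nodes / 1000.0


def tile_pflops(per_chip_tflops: float, chips: int = D1_PER_TILE) -> float:
    """Peak PFLOPS of one tile, assuming linear scaling over chips."""
    return per_chip_tflops * chips / 1000.0


d1_bfp16_tflops = chip_tflops(NODE_GFLOPS_BFP16_CFP8)
d1_fp32_tflops = chip_tflops(NODE_GFLOPS_FP32)
tile_bfp16_pflops = tile_pflops(d1_bfp16_tflops)

print(f"D1 BFP16/CFP8: {d1_bfp16_tflops:.1f} TFLOPS")
print(f"D1 FP32:       {d1_fp32_tflops:.1f} TFLOPS")
print(f"Tile BFP16/CFP8: {tile_bfp16_pflops:.2f} PFLOPS")
```

The products come out to roughly 362.5 TFLOPS and 22.7 TFLOPS per D1 chip and roughly 9.06 PFLOPS per tile, consistent with the rounded values on the slides.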

The first Training Tile became operational the week before August 19, 2021 (2 GHz).

Patents introduced in the previous entry that are probably related to the packaging:

"Voltage regulator module with cooling structure", US10887982B2

Mohamed Nasr, Aydin Nabovati, Jin Zhao

2018-03-22: Priority to US201862646835P

...

2019-09-26: Publication of US20190297723A1

2021-01-05: Publication of US10887982B2

2021-01-05: Application granted

2018-09-19: Priority to US201862733559P

...

2020-03-26: Publication of WO2020061330A1

Tesla CFP8: 8-bit Configurable Floating-Point

Tesla AI Day gave no details on CFP8 (8-bit Configurable Floating-Point), which the Dojo chip supports, but when I rechecked the Tesla patent introduced in the previous entry, I found the following:

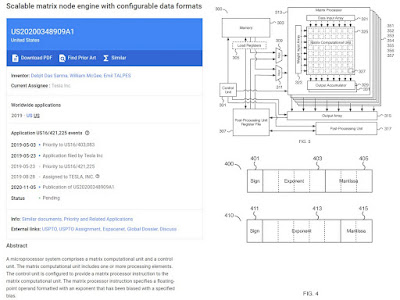

"Scalable matrix node engine with configurable data formats", US20200348909A1

Debjit Das Sarma, William McGee, Emil TALPES

2019-05-03: Priority to US16/403,083

...

2020-11-05: Publication of US20200348909A1

Abstract

"The matrix processor instruction specifies a floating-point operand formatted with an exponent that has been biased with a specified bias."

FIG. 4 is a block diagram illustrating embodiments of an 8-bit floating-point format.

1-4-3 (400)

1-5-2 (410)
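To make the sign-exponent-mantissa splits and the "specified bias" from the abstract concrete, here is a minimal Python sketch of decoding one byte under either split. It assumes IEEE-754-like rules (implicit leading 1, subnormals when the exponent field is 0) and reserves no Inf/NaN codes. The patent text quoted here does not confirm those details, and `decode_fp8` is a hypothetical helper, not Tesla's implementation.

```python
def decode_fp8(byte: int, exp_bits: int, man_bits: int, bias: int) -> float:
    """Decode an 8-bit pattern laid out as sign | exponent | mantissa.

    Assumption: IEEE-like semantics without Inf/NaN encodings.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("byte must be in 0..255")
    if 1 + exp_bits + man_bits != 8:
        raise ValueError("sign + exponent + mantissa must total 8 bits")

    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exp_field = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man_field = byte & ((1 << man_bits) - 1)
    frac = man_field / (1 << man_bits)

    if exp_field == 0:
        # Subnormal: no implicit leading 1, fixed minimum exponent.
        return sign * frac * 2.0 ** (1 - bias)
    return sign * (1.0 + frac) * 2.0 ** (exp_field - bias)


# The same byte means different things depending on split and bias.
pattern = 0x38
as_143_bias7 = decode_fp8(pattern, exp_bits=4, man_bits=3, bias=7)
as_143_bias11 = decode_fp8(pattern, exp_bits=4, man_bits=3, bias=11)
as_152_bias15 = decode_fp8(pattern, exp_bits=5, man_bits=2, bias=15)
print(as_143_bias7, as_143_bias11, as_152_bias15)
```

Raising the bias shifts the whole representable range toward smaller magnitudes without spending any extra bits, which is presumably the appeal of making it configurable.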

"Depending on the use case, one or another format is selected. In the event a high precision number is needed, more bit can be allocated to the mantissa and a format, such as format 400 with more mantissa bits than format 410, is selected. A format with more mantissa bits may be selected for performing gradient descent where very small deltas are required to preserve accuracy. As another example, a format with more mantissa bits may be selected for performing forward propagation to compute a cost function. As another optimization, each floating-point format utilizes a configurable bias."

FIG. 5 is a block diagram illustrating an embodiment of a 21-bit floating-point format.

1-7-13 (500)

"Using 8-bit elements as input elements and storing the intermediate results using a 21-bit format preserves the precision and accuracy required for training while also maintaining high input bandwidth to the matrix processor."

A 27-bit format also appears to be under consideration, but no figure illustrates it.

27-bit floating-Point

1-9-17
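The patent's rationale for keeping intermediate results in a wider format can be illustrated with a toy accumulation. The sketch below rounds a running sum to a given number of mantissa bits after every addition and compares 3 bits (as in 1-4-3) with 13 bits (1-7-13) and 17 bits (1-9-17). It is only an illustration under my own assumptions: exponent range is ignored, rounding is round-to-nearest-even, and the helper names are hypothetical.

```python
import math


def round_to_mantissa(x: float, man_bits: int) -> float:
    """Round x to man_bits fractional mantissa bits (exponent range ignored)."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)           # x == m * 2**e with 0.5 <= |m| < 1
    scale = 1 << (man_bits + 1)    # implicit leading bit + man_bits fraction bits
    return math.ldexp(round(m * scale) / scale, e)


def accumulate(terms, man_bits: int) -> float:
    """Sum terms, rounding the accumulator after every addition."""
    acc = 0.0
    for t in terms:
        acc = round_to_mantissa(acc + t, man_bits)
    return acc


terms = [0.01] * 1000              # exact sum would be 10.0
sum_3 = accumulate(terms, 3)       # accumulator as narrow as a 1-4-3 value
sum_13 = accumulate(terms, 13)     # mantissa width of the 21-bit 1-7-13 format
sum_17 = accumulate(terms, 17)     # mantissa width of the 27-bit 1-9-17 format
print(sum_3, sum_13, sum_17)
```

With only 3 mantissa bits the sum stops growing once each addend is smaller than half the accumulator's spacing, while the wider accumulators stay close to 10.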

Hybrid 8-bit Floating Point (HFP8) Training and Inference for DNNs, IBM

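However, IBM presented training and inference with multiple 8-bit floating-point formats in a NeurIPS 2019 poster, and before that it had already filed a patent application, which has since been granted: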

"Hybrid 8-bit Floating Point (HFP8) Training and Inference for Deep Neural Networks",

Xiao Sun, Jungwook Choi, Chia-Yu Chen, Naigang Wang, Swagath Venkataramani, Vijayalakshmi (Viji) Srinivasan, Xiaodong Cui, Wei Zhang, Kailash Gopalakrishnan, IBM, Poster, NeurIPS 2019

(1-4-3) for forward propagation

(1-5-2) for backward propagation
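The trade-off between the two splits is easy to quantify. The following Python sketch computes the largest value, smallest positive value, and relative step of each format. It assumes IEEE-like encodings with no Inf/NaN codes and biases of 7 and 15. Those biases are my assumption for illustration and may not match the values IBM actually uses.

```python
def fp_format_summary(exp_bits: int, man_bits: int, bias: int) -> dict:
    """Range and precision of a sign/exponent/mantissa format.

    Assumption: IEEE-like normals and subnormals, no Inf/NaN encodings.
    """
    max_exp = (1 << exp_bits) - 1 - bias
    return {
        "largest": (2.0 - 2.0 ** -man_bits) * 2.0 ** max_exp,
        "smallest": 2.0 ** (1 - bias - man_bits),  # smallest subnormal
        "rel_step": 2.0 ** -man_bits,              # spacing relative to 1.0
    }


fwd = fp_format_summary(exp_bits=4, man_bits=3, bias=7)   # (1-4-3)
bwd = fp_format_summary(exp_bits=5, man_bits=2, bias=15)  # (1-5-2)

for name, fmt in (("1-4-3", fwd), ("1-5-2", bwd)):
    print(name, fmt)
```

Under these assumptions (1-4-3) has half the relative step of (1-5-2), while (1-5-2) covers a far wider range of magnitudes, which fits using it for gradients that can become very small.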

"Finally, through hardware design experiments, we’ve confirmed that floating-point units (FPUs) that can support both formats are only 5% larger than the original FPUs that only support 1-5-2."

"For errors and gradients in HFP8 back-propagation, we employ the (1-5-2) FP8 format, which has proven to be optimal across various deep learning tasks."

Naturally, the patent process was already under way:

"Hybrid floating point representation for deep learning acceleration", US10963219B2

Naigang Wang, Jungwook CHOI, Kailash Gopalakrishnan, Ankur Agrawal, Silvia Melitta Mueller

2019-02-06: Application filed by IBM

...

2020-08-06: Publication of US20200249910A1

2021-03-30: Application granted

2021-03-30: Publication of US10963219B2

"Neural network circuitry having floating point format with asymmetric range", US20210064976A1

Xiao Sun, Jungwook CHOI, Naigang Wang, Chia-Yu Chen, Kailash Gopalakrishnan

2019-09-03: Application filed by IBM

...

2021-03-04: Publication of US20210064976A1

A chip implementation was presented at ISSCC 2021:

"Introducing the AI chip leading the world in precision scaling", Jan 18, 2021

"A 7nm 4-Core AI Chip with 25.6TFLOPS Hybrid FP8 Training, 102.4TOPS INT4 Inference and Workload-Aware Throttling",

Ankur Agrawal, SaeKyu Lee, Joel Silberman, ..., Kailash Gopalakrishnan, IBM, ISSCC 2021

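FP8-fwd (1, 4, 3)

FP8-bwd (1, 5, 2)

FP9 (1, 5, 3)

Note: FP8-fwd and FP8-bwd operands are widened to FP9 before the arithmetic is performed, which is clever.

Note: There are other refinements as well, such as in the precision of the arithmetic units, to preserve accuracy.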
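Why a (1, 5, 3) format is a natural common target can be checked by brute force: enumerate every value of both 8-bit formats and test whether each is exactly representable in 9 bits. The Python sketch below does this under my own assumptions (IEEE-like encodings, no Inf/NaN codes, biases of 7, 15, and 15). IBM's actual biases and special-value handling may differ, so treat it as an illustration rather than a description of the chip.

```python
def decode(code: int, exp_bits: int, man_bits: int, bias: int) -> float:
    """Decode a (1, exp_bits, man_bits) pattern; IEEE-like, no Inf/NaN."""
    sign = -1.0 if (code >> (exp_bits + man_bits)) & 1 else 1.0
    exp_field = (code >> man_bits) & ((1 << exp_bits) - 1)
    frac = (code & ((1 << man_bits) - 1)) / (1 << man_bits)
    if exp_field == 0:
        return sign * frac * 2.0 ** (1 - bias)  # subnormal
    return sign * (1.0 + frac) * 2.0 ** (exp_field - bias)


def all_values(exp_bits: int, man_bits: int, bias: int) -> set:
    """Every value representable in the given format."""
    total_bits = 1 + exp_bits + man_bits
    return {decode(c, exp_bits, man_bits, bias) for c in range(1 << total_bits)}


fp8_fwd = all_values(4, 3, bias=7)   # (1, 4, 3), assumed bias
fp8_bwd = all_values(5, 2, bias=15)  # (1, 5, 2), assumed bias
fp9 = all_values(5, 3, bias=15)      # (1, 5, 3), assumed bias

fwd_fits_in_fp9 = fp8_fwd <= fp9
bwd_fits_in_fp9 = fp8_bwd <= fp9
fwd_fits_in_bwd = fp8_fwd <= fp8_bwd
bwd_fits_in_fwd = fp8_bwd <= fp8_fwd
print(fwd_fits_in_fp9, bwd_fits_in_fp9, fwd_fits_in_bwd, bwd_fits_in_fwd)
```

Under these assumptions both 8-bit formats convert to FP9 without rounding, whereas neither 8-bit format can hold all values of the other, so a single FP9 datapath can serve both.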

"IBM Brings 8-Bit AI Training to Hardware", Feb 26, 2021

Patent filing dates for IBM and Tesla:

"Hybrid floating point representation for deep learning acceleration", IBM

2019-02-06: Application filed by IBM

"Scalable matrix node engine with configurable data formats", Tesla

2019-05-03: Priority to US16/403,083

2019-05-23: Application filed by Tesla

"Neural network circuitry having floating point format with asymmetric range", IBM

2019-09-03: Application filed by IBM

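IBM's application for a patent on using different floating-point representations of the same bit width for forward and backward propagation in machine learning predates Tesla's by a few months, so training algorithms built on Tesla CFP8 (8-bit Configurable Floating-Point) will probably need to tread carefully. (The two companies appear to assign the formats in opposite ways, but that difference is unlikely to be essential.)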

2x Dojo D1 Chip Module?

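At Tesla AI Day, the single-chip D1 Chip was unveiled.

A packaging-related patent application that may relate to small-scale modules for in-vehicle use:

Note: The last-listed inventor is Ganesh VENKATARAMANAN.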

"Electronic assembly having multiple substrate segments", US20210005546A1

Mengzhi Pang, Shishuang Sun, Ganesh VENKATARAMANAN

2017-12-08: Priority to US201762596217P

...

2021-01-07: Publication of US20210005546A1

"Comparative Study on Power Module Architectures for Modularity and Scalability", Review, Dec 2020

Tesla says that a range of its own know-how went into realizing the Dojo Training Tile. The following survey paper on automotive power modules, which also covers the Tesla Model 3 inverter, may be a useful reference:

"Comparative Study on Power Module Architectures for Modularity and Scalability", Review, Journal of Electronic Packaging, Dec 2020

A sample of a Tesla Model 3 Inverter report:

[Sample] STMicroelectronics SiC Module: Tesla Model 3 Inverter, Jun 2018

"Scaled compute fabric for accelerated deep learning", Cerebras, Patent Application, Mar 5, 2020 (Aug 28, 2018)

Cerebras's patent application for a module-based system, mentioned in the previous entry:

"Scaled compute fabric for accelerated deep learning", WO2020044152A1

Gary R. Lauterbach, Sean Lie, Michael Morrison, Michael Edwin JAMES, Srikanth Arekapudi

2018-08-28: Priority to US201862723636P

...

2019-08-11: Application filed by Cerebras Systems Inc.

2020-03-05: Publication of WO2020044152A1

"An array of clusters communicates via high-speed serial channels.

The array and the channels are implemented on a Printed Circuit Board (PCB).

Each cluster comprises respective processing and memory elements.

Each cluster is implemented via a plurality of 3D-stacked dice, 2.5D-stacked dice, or both in a Ball Grid Array (BGA). "

"A memory portion of the cluster is implemented via one or more High Bandwidth Memory (HBM) dice of the stacked dice."

"PE Cluster 481 comprises 64 instances of PE 499 on a die, each PE with 48KB of local memory and operable at 500MHz. PEs+HBM 483 comprises the HBM2 3D stack 3D-stacked on top of the PE die in a BGA package with approximately 800 pins and dissipating approximately 20 watts during operation. There is 4GB/64 = 64MB of non-local memory capacity per PE. "

"The 48KB local memory of each PE is used to store instructions (e.g., all or any portions of Task SW on PEs 260 of Fig. 2) and data, such as parameters and activations (e.g., all or any portions of (weight) wAD 1080 and (Activation) aA 1061 of Fig. 10B). "

[(64x PE (48 KB local Memory)) + HBM2: 4GB (64 MB/PE)]

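Note: This is only a patent application, so it is unclear whether Cerebras is actually pursuing it, but it is worth watching.

Closing remarks

A comment from nikonikoten:

"Still, to think that a car maker would develop an HPC system for AI. Pushing vertical integration across every technology is a sight to behold. Has there ever been a manufacturer like this?"

Bonus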

Jim Keller left AMD and moved to Tesla in February 2016.

According to LinkedIn:

"Scalable matrix node engine with configurable data formats", US20200348909A1

Debjit Das Sarma, William McGee, Emil TALPES

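All three moved from AMD to Tesla in February and April 2016.

Tenstorrent, where Jim Keller became CTO in December 2020, was founded in May 2016. Ljubisa Bajic (Founder and CEO) previously worked at AMD.

Broadly speaking, both adopt many-core designs.

2015 was quite possibly when AMD settled its Exascale effort and architectural direction: the APU integrating CPU and GPU, and the chiplets that led to EPYC. Did Jim Keller and the others leave AMD over a difference in direction?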

"DOE's Fast Forward and Design Forward R&D Projects: Influence Exascale Hardware", Sandia National Lab, Feb 2015

"Design and Analysis of an APU for Exascale Computing", AMD, Industry Session, HPCA 2017

Note: What is the current status of the AMD APU?

Chiplets

"NoC Architectures for Silicon Interposer Systems", Natalie Enright Jerger, Ajaykumar Kannan, ..., Gabriel H. Loh, University of Toronto and AMD, Micro 2014

"Enabling Interposer-based Disintegration of Multi-core Processors", Ajaykumar Kannan, Natalie Enright Jerger, Gabriel H. Loh, University of Toronto and AMD, Micro 2015

https://dl.acm.org/doi/10.1145/2830772.2830808

"Exploiting Interposer Technologies to Disintegrate and Reintegrate Multicore Processors", Ajaykumar Kannan, Natalie Enright Jerger, Gabriel H. Loh, University of Toronto and AMD, IEEE Micro, May/Jun 2016

"Enabling Interposer-based Disintegration of Multi-Core Processors", Ajaykumar Kannan, MSc Thesis, Nov, 2015

Acknowledgements "to thank Gabriel Loh at AMD Corp"

Gabriel H. Loh's ISCA 2021 paper and talk slides (a video should be released eventually):

"Understanding Chiplets Today to Anticipate Future Integration Opportunities and Limits", Gabriel H. Loh, et al., AMD, Industry Track, ISCA 2021

"An Overview of Chiplet Technology for the AMD EPYC and Ryzen Processor Families", Gabriel Loh, Senior Fellow, AMD, IEEE SCV - Industry Spotlight, Aug 24, 2021

An interesting analysis of AMD chiplets (NoC) by Ian Cutress (AnandTech):

"Does an AMD Chiplet Have a Core Count Limit?", Sep 7, 2021

Further progress in chiplets will require ingenuity in the NoC.
